October has completely disappeared! I meant to post my second approach to sentiment analysis as a follow up to the first, but the month has come and gone, so I guess it will have to wait a little longer. However, I do have a couple of things to share as part of my regular update on tips and tricks that are new to me.

Did anyone else take part in Hacktoberfest? The overall point of it is to get more people contributing to open source, and as a way of doing that, the aim is to submit four pull requests in October to public GitHub repos that get accepted. It’s a really nice idea and as I haven’t pushed myself in that area before, I made a bit of a start. Mainly I was contributing to knowledge and training repos with resources I found helpful or a bit of proofreading - every little helps! And it’s definitely got me more into the pattern of considering contributing when I see things I can do.

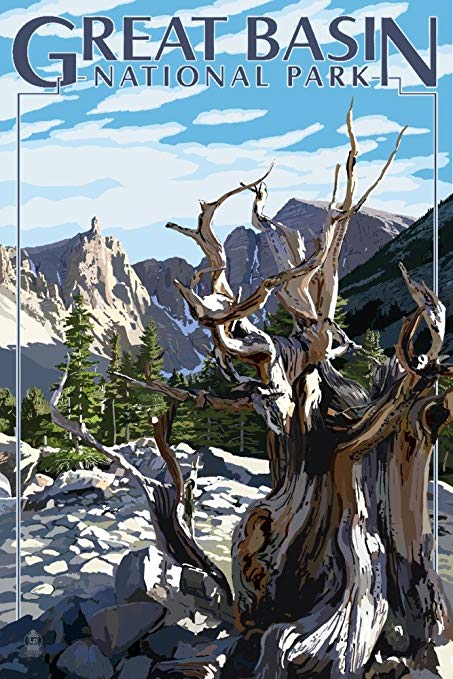

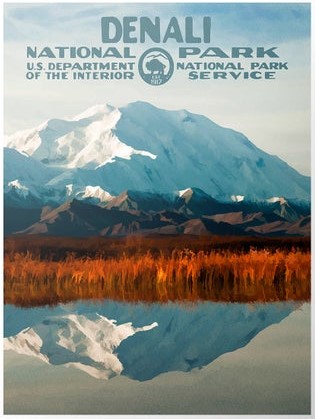

One of the projects I was really charmed by is the package nationalparkcolors by GitHub user katiejolly. This provides colour palettes based on US National Park posters, which are just gorgeous. I provided palettes for Great Basin and Denali, which were raised in the Issues.

|

|

|---|

While I was working on this, I coincidentally came across the package MapPalettes. Primarily this exists to provide palettes and functions for maps, but one function in particular caught my eye: get_colors_from_image(). As arguments, you can give a URL of an image and how many colours you want, and it will create a palette from the picture.

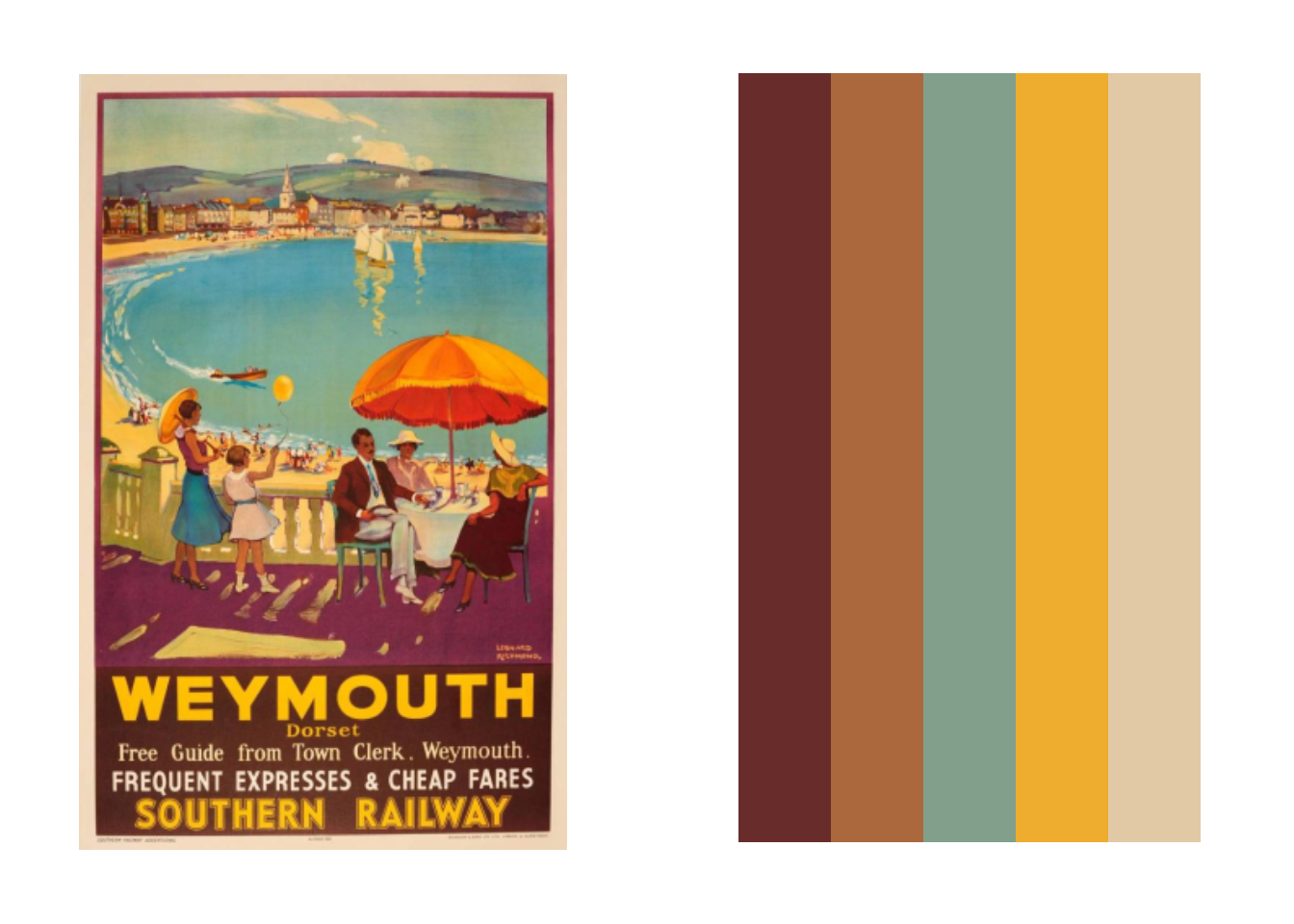

Here’s an example using a vintage British poster. To install the MapPalettes package, you have to fetch it from its GitHUb repo: remotes::install_github("disarm-platform/MapPalettes").

library(MapPalettes)

get_colors_from_image(image = "https://flashbak.com/wp-content/uploads/2017/05/1935-Southern-Railway-Travel-Advertising-Poster-For-Weymouth-Dorset-1935-644x1024.jpg",

n = 5)

## [1] "#682D2B" "#AA683C" "#819F8B" "#EEAD2E" "#E2C9A6"I could spend hours trying that with different images - so glad I’ve discovered it. Having said that, I didn’t end up using it for the nationalparks contribution because it picks out the main colour groups, which means it can miss small accent colours, like the green in the Great Basin image. Still, I’m sure I’ll use it in the future.

The other thing I wanted to write about is more of a practical fix. I’ve been using Python more in the past months, and one thing I found odd was that I couldn’t see an easy way to create several functions and apply them across a project. In R, I would have a file of a few functions saved, and then access this using source("./functions_folder/my_functions.R")in any script that needed them. But in Python, doing something like from my_functions import custom_function only works if you are in the same folder, as far as I could work out from googling around. But practically, my projects often have several subfolders, and it seems like serious overkill to have my_functions file in every subfolders. Workarounds on StackOverflow either seemed really overengineered or simply didn’t work for me. And while I’m sure creating a package could be a way of dealing with it, again that seems like a lot if the functions are really for this specific project.

Happily for me, one of my colleagues suggested a useful and more straightforward answer. It involved creating a folder to hold the files where I’d written my functions, so my project directory would look something like this.

project

│

└───some_scripts

│ first_script.ipynb

│

└───functions_folder

my_functions.pyThen, from any python notebook or file (such as first_script.ipynb above), I could include the following snippet to import my custom functions.

import sys

sys.path.append("..")

from functions_folder.my_functions import custom_functionBasically this treats the functions_folder like a package, and using that you can access subfolders and functions themselves. And now I can access these functions from anywhere in my project, without having to do anything particularly complicated.

Finally, just in before the month’s end, last night I went to an EMBOLDENHER X AI Club meetup that provided tips from software engineering for data science. This was a really engaging event with two excellent speakers. Fei Phoon told us about the importance and utility of testing your code before passing it onto data engineers, while Devon Edwards Joseph ran a workshop on using the command line for data analysis. I picked up useful tips from both; you can find Fei’s slides here and Devon’s tutorial here.

That’s it for this time. Hopefully I’ll be able to post the next steps in the sentiment analysis project soon!